Just to catch you up

It has also helped when I haven't been sure how to show something: I've just vaguely instructed it to build something out and then seen what it came up with. Sometimes it hits the mark, sometimes you need to throw it through the machine again, but generally speaking it's allowed me to not get bogged down bikeshedding elements of the site. Sometimes it also goes above and beyond, and these happy little accidents have made their way into production.

Where this rabbit hole starts

So, on to the last couple of weeks of development. Trav is away on a much-deserved holiday. Left to my own devices, I decided it was the perfect opportunity to tackle something that's been bugging our testers: the inability to manage uploaded products effectively.

The current simple product listing quickly becomes unmanageable with a sprawling set of previously uploaded products (couches, tables, lamps), all cluttering the interface in a somewhat random order. I figured categorisation was the first step to tidying this up.

Behind the scenes, LookSee already does a bunch of processing on a product before merging it into your spaces, but the categorisation is left open for the AI to pick the best label. Terms like "sofa" and "couch" appeared separately, which made organisation challenging. This works fine for the backend logic, but not so well for users trying to filter products.
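To make the "sofa" versus "couch" problem concrete, here is a minimal sketch of collapsing free-form labels into one canonical category. It is not LookSee's actual code: the `CANONICAL_LABELS` table, the `normaliseLabel` helper and the `"uncategorised"` fallback are all names I've made up for illustration.

```typescript
// Hypothetical synonym table: free-form AI labels mapped to one canonical
// category each. The entries are illustrative, not LookSee's real data.
const CANONICAL_LABELS = new Map<string, string>([
  ["sofa", "sofa"],
  ["couch", "sofa"],
  ["settee", "sofa"],
  ["lamp", "lamp"],
  ["table lamp", "lamp"],
]);

// Trim and lowercase the raw label, then look it up.
// Unknown labels fall back to a catch-all bucket (an assumption of this sketch).
function normaliseLabel(raw: string): string {
  const key = raw.trim().toLowerCase();
  return CANONICAL_LABELS.get(key) ?? "uncategorised";
}
```

With something like this in place, `normaliseLabel("Couch")` and `normaliseLabel(" sofa ")` land in the same bucket, which is the property a user-facing filter needs.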

It was time to standardise. I researched a bunch of different categorisation standards and decided on UNSPSC (United Nations Standard Products and Services Code), a widely recognised framework for product categorisation. It's comprehensive, used internationally, and ideal for streamlining our listings. (You can learn more about UNSPSC codes here).

Down we go

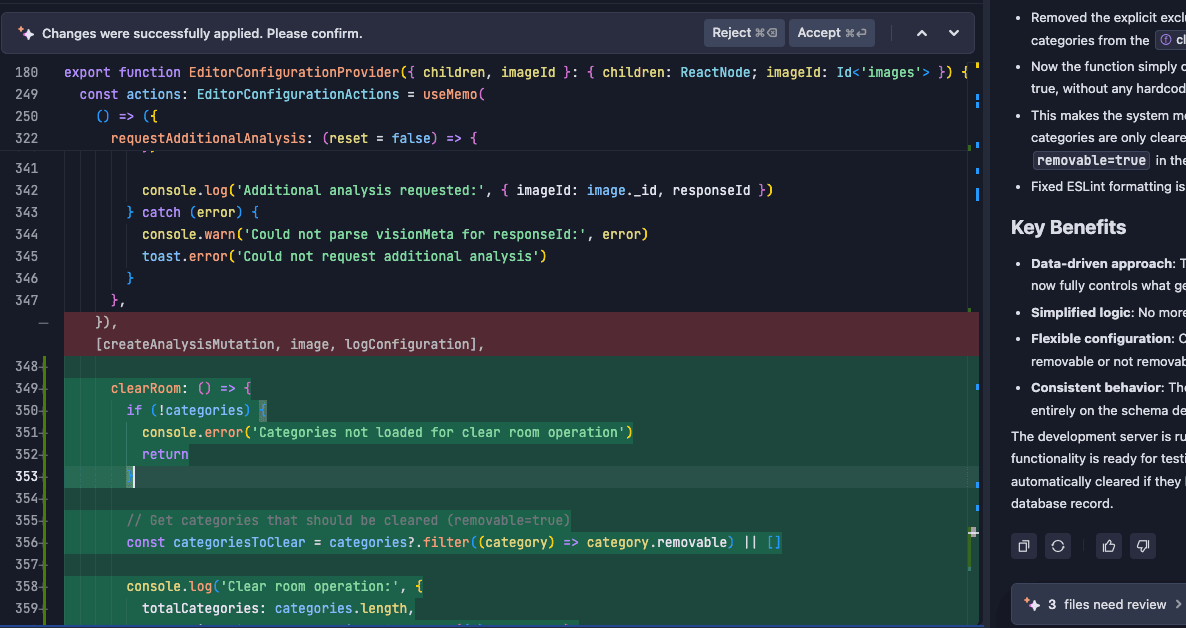

AI helped quickly build out the DB and, although I didn't ask for it, it also built an administration console to manage it. (I did need a nice way to upload the data, but realistically speaking, once it's in, it doesn't change.) This was the first of what I figured were 'happy little accidents' on this journey. I began culling the UNSPSC taxonomy down to the entries useful for LookSee and got it into the dev server. Then I started on the product listing, which also picked up an editor for the products along the way. I then had to map different parts of the UNSPSC so it made sense for users, and the organisation seemed promising. Encouraged by the ease of implementation, I moved forward enthusiastically, adding pagination, search and filtering capabilities. Each addition seemed straightforward, facilitated by AI-driven tools. About 4 days later, I found myself trying to finalise a pull request (PR) with over 3,000 lines of new code.

For most development, 4 days isn't overly long. But in recent LookSee development, where I have easily shipped multiple PRs in a day, 4 days felt like a month. In my previous position, I generally pushed for smaller PRs, not only so changes were compartmentalised, but also because reviewing them was much easier than digging through mountains of changes. So this PR was getting huge, and it was already starting to feel a bit stale, with code not shipping as quickly as before.

The rabbit hole doesn't bottom out

Then issues began to surface. Filtering wasn't working correctly, especially in combination with pagination. It wasn’t exactly broken, but it was neither performant nor intuitive. After a couple of hours of frustration, and with dinner waiting, I stepped away. A nagging suspicion had already set in that perhaps I'd gone down the wrong path, but stubbornly, I hoped a quick fix would emerge.
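To illustrate why filtering and pagination are awkward together, here is a generic in-memory sketch. It is not the LookSee code and it doesn't use Convex's API; `Product`, `Page`, `filterThenPaginate`, `paginateThenFilter` and the sample data are all hypothetical names for the sake of the example. The order of operations matters: slice a page first and filter afterwards, and pages can come back short or even empty while matching items still exist further along.

```typescript
interface Product {
  id: number;
  category: string;
}

interface Page<T> {
  items: T[];
  nextOffset: number | null; // null means there are no further pages
}

// Filter first, then slice: every page except the last is full.
function filterThenPaginate(
  products: Product[],
  category: string,
  offset: number,
  pageSize: number,
): Page<Product> {
  const matching = products.filter((p) => p.category === category);
  const items = matching.slice(offset, offset + pageSize);
  const next = offset + pageSize;
  return { items, nextOffset: next < matching.length ? next : null };
}

// Slice first, then filter: a page holds only whatever matches happen to
// fall inside that slice, so it can be short or empty.
function paginateThenFilter(
  products: Product[],
  category: string,
  offset: number,
  pageSize: number,
): Product[] {
  return products
    .slice(offset, offset + pageSize)
    .filter((p) => p.category === category);
}

// Illustrative sample data only.
const sampleProducts: Product[] = [
  { id: 1, category: "sofa" },
  { id: 2, category: "lamp" },
  { id: 3, category: "lamp" },
  { id: 4, category: "sofa" },
  { id: 5, category: "sofa" },
  { id: 6, category: "lamp" },
];
```

With a page size of 2, `filterThenPaginate(sampleProducts, "sofa", 0, 2)` returns two sofas, while `paginateThenFilter(sampleProducts, "sofa", 0, 2)` returns only one, because the second item in that slice is a lamp. In a real database the filter-first version is only cheap if the query can use an index, which is the part that kept me up at night.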

Cue bedtime, where any hope of peaceful sleep quickly vanished. My brain churned through possible solutions, weighed down by the realisation that our database, Convex (similar to Mongo or a document store), doesn't feel well suited to the kind of filtering I'd implemented. I'm sure this is more a lack of understanding on my part. It's not like I was seeing performance issues, and I feel I was implementing things as per their docs and guides, but my brain has a tendency to join the dots about 3 steps past what I'm working on, and it feels like it could become a bottleneck. Efficient filtering at scale is easily achieved in relational databases like Postgres or MySQL, but I'm not sure how Convex will compare performance-wise (or, stupidly, whether this will ever be an issue). Also worth noting: Convex is built by some phenomenal people who know scale, so I'm probably just overthinking this.

Finally realising you are in a rabbit hole

Around 2am, clarity struck. "What was I even trying to achieve?" I asked myself. All I'd needed was a simple editor for deleting items. Instead, I had crafted an overly complicated, over-engineered (or, to borrow a term from a previous job, gold-plated), buggy feature. The ease of AI-enhanced tools had drawn me too far down an unnecessary path.

Accepting this, I shelved the bloated branch. More frustrating was having to extract the useful fixes into a clean and manageable pull request, reinforcing that I need to keep PRs small and targeted. Ironically, just as I thought I'd finally relax, my brain switched gears again, deciding this would make a good blog post. Cue another round of mental note-taking about the mistakes I had just made.

It was a tough lesson but the right call. I still believe using UNSPSC is a good idea, and I plan to incorporate it later to properly standardise categorisation. Just not intertwined with complex editors and pagination.

AI tools undoubtedly accelerate development, but they also amplify mistakes. You can quickly find yourself deeper into a problem, making eventual regret feel significantly worse.

Closing thoughts

For now, I'm calling it a day. Live and learn, I suppose. I'm not sure how solo developers survive. It's not about a lack of ability; it's more about having someone to bounce ideas off, to rationalise thoughts with, and to make sure you are doing what's best for the project and not just what you want to do (or think you need to do).

Also to note, Trav is never allowed to go on leave again.